Deep neural networks have gained fame for their capability to process visual information. And in the past few years, they have become a key component of many computer vision applications.

Among the key problems neural networks can solve is detecting and localizing objects in images. Object detection is used in many different domains, including autonomous driving, video surveillance, and healthcare.

In this post, I will briefly review the deep learning architectures that help computers detect objects.

Convolutional neural networks

One of the key components of most deep learning–based computer vision applications is the convolutional neural network (CNN). Invented in the 1980s by deep learning pioneer Yann LeCun, CNNs are a type of neural network that is efficient at capturing patterns in multidimensional spaces. This makes CNNs especially good for images, though they are used to process other types of data too. (To focus on visual data, we’ll consider our convolutional neural networks to be two-dimensional in this article.)

Every convolutional neural network is composed of one or several convolutional layers, a software component that extracts meaningful values from the input image. And each convolution layer is composed of several filters, square matrices that slide across the image and register the weighted sum of pixel values at different locations. Each filter has different values and extracts different features from the input image. The output of a convolution layer is a set of “feature maps.”
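To make the sliding-filter idea concrete, here is a minimal sketch in plain Python. It assumes a single-channel image stored as a list of lists, a stride of 1, and no padding; the function name `convolve2d` and the hand-picked vertical-edge filter are illustrative assumptions. In a trained CNN the filter values are learned, not written by hand, and (like most deep learning libraries) the sketch computes the weighted sum without flipping the kernel.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over a 2D image and record the weighted sum
    of pixel values at each position.

    Illustrative sketch: single channel, stride 1, no padding (assumptions).
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the k x k patch whose top-left corner is (i, j)
            total = 0
            for di in range(k):
                for dj in range(k):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hand-picked (hypothetical) filter that responds to vertical edges
vertical_edge = [[1, 0, -1],
                 [1, 0, -1],
                 [1, 0, -1]]

# 4 x 6 toy image: bright left half, dark right half
image = [[9, 9, 9, 0, 0, 0] for _ in range(4)]

print(convolve2d(image, vertical_edge))  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

The output is large only where the filter straddles the boundary between the bright and dark halves, which is the kind of response a vertical-edge feature map registers.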

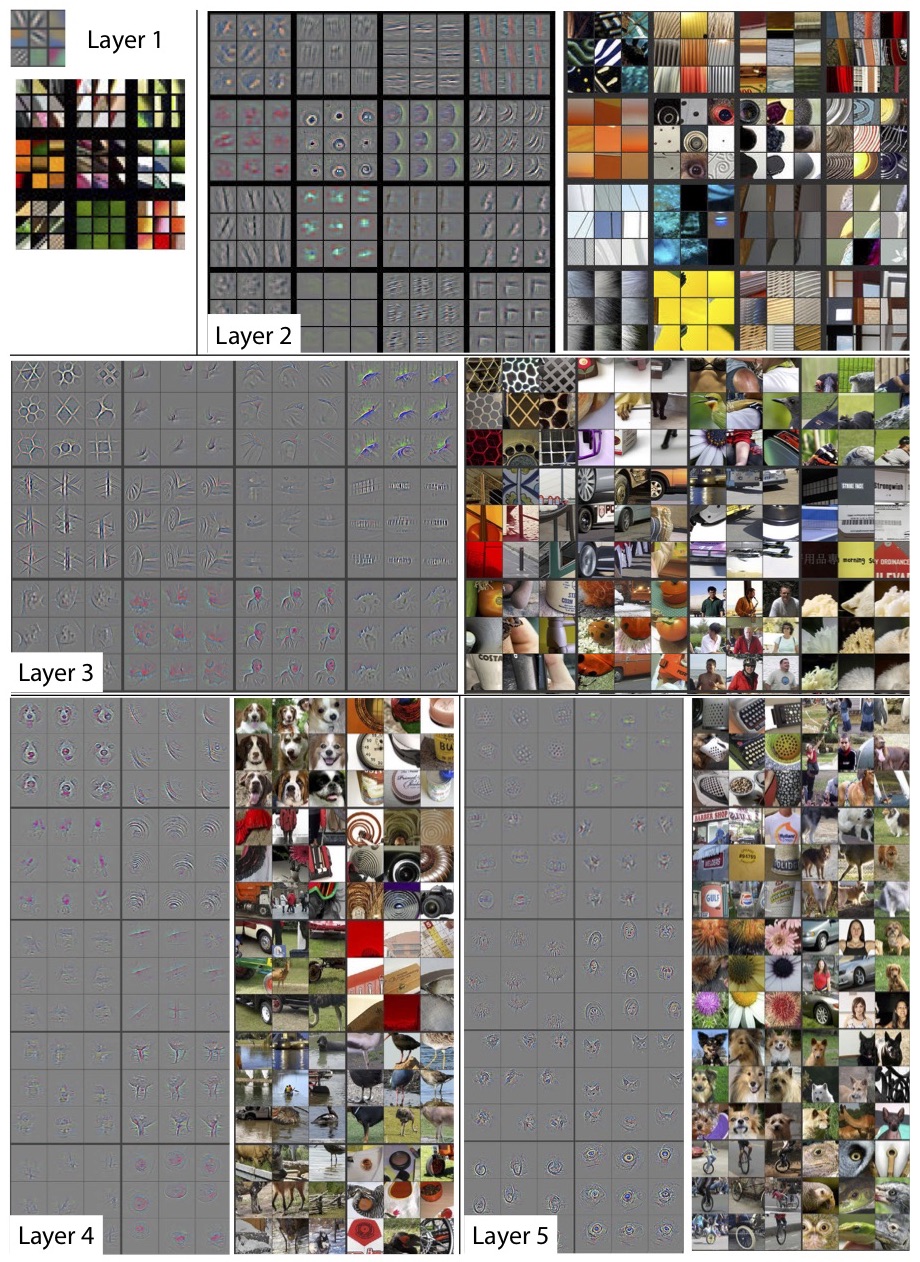

When stacked on top of each other, convolutional layers can detect a hierarchy of visual patterns. For instance, the lower layers will produce feature maps for vertical and horizontal edges, corners, and other simple patterns. The next layers can detect more complex patterns such as grids and circles. As you move deeper into the network, the layers will detect complicated objects such as cars, houses, trees, and people.

Most convolutional neural networks use pooling layers to gradually reduce the size of their feature maps and keep the most prominent parts. Max-pooling, which is currently the main type of pooling layer used in CNNs, keeps the maximum value in a patch of pixels. For example, if you use a pooling layer with a size of 2, it will take 2×2-pixel patches from the feature maps produced by the previous layer and keep the highest value. This operation halves the width and height of the maps and keeps the most relevant features. Pooling layers enable CNNs to generalize their capabilities and be less sensitive to the displacement of objects across images.
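The 2×2 max-pooling step described above can be sketched in a few lines of plain Python. This assumes non-overlapping windows (stride equal to the window size) and a single feature map stored as a list of lists; `max_pool2d` is a hypothetical helper name, and rows or columns that don't fill a whole window are simply dropped.

```python
def max_pool2d(feature_map, size=2):
    """Keep the maximum value of each non-overlapping size x size patch.

    Sketch assumptions: stride == size, leftover rows/columns are dropped.
    """
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


fm = [[1, 3, 2, 0],
      [4, 2, 1, 5],
      [0, 1, 7, 2],
      [6, 2, 3, 1]]

print(max_pool2d(fm))  # [[4, 5], [6, 7]]
```

The 4×4 map shrinks to 2×2, so only a quarter of the values survive, and each survivor is the strongest activation in its patch.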

Finally, the output of the convolution layers is flattened into a one-dimensional vector that is the numerical representation of the features contained in the image. That vector is then fed into a series of “fully connected” layers of artificial neurons that map the features to the kind of output expected from the network.
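Here is a minimal sketch of flattening followed by one fully connected layer, in plain Python. The weights and biases below are made-up numbers for illustration (a real network learns them), `flatten` and `dense` are hypothetical helper names, and the activation function that usually follows a fully connected layer is left out to keep the example short.

```python
def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(inputs, weights, biases):
    """Fully connected layer: each output neuron computes a weighted sum of
    every input plus its own bias (no activation, for brevity)."""
    return [sum(w * x for w, x in zip(w_row, inputs)) + b
            for w_row, b in zip(weights, biases)]


# Two 2x2 feature maps, e.g. the output of a pooling layer
maps = [[[4, 5], [6, 7]],
        [[1, 0], [0, 1]]]

vector = flatten(maps)  # 8 values

# Hypothetical weights for a layer with 2 output neurons
weights = [[1, 0, 0, 0, 0, 0, 0, 0],
           [0, 0, 0, 0, 1, 1, 1, 1]]
biases = [0.5, -1]

print(dense(vector, weights, biases))  # [4.5, 1]
```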

The most basic task for convolutional neural networks is image classification, in which the network takes an image as input and returns a list of values that represent the probability that the image belongs to one of several classes. For example, say you want to train a neural network to classify images into the 1,000 object classes of the popular open-source ImageNet classification benchmark. In that case, your output layer will have 1,000 numerical outputs, each of which contains the probability of the image belonging to one of those classes.
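The article doesn't say how the raw outputs of the last layer become probabilities. A common choice, assumed here, is the softmax function, which makes every value positive and the whole list sum to 1. This is a minimal sketch with three made-up class scores; a 1,000-class ImageNet classifier would apply the same function to 1,000 scores.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max so math.exp never overflows
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes, e.g. "cat", "dog", "car"
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
print([round(p, 3) for p in probs])
```

Subtracting the maximum score before exponentiating doesn't change the result, but it keeps very large scores from overflowing.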

You can always create and test your own convolutional neural network from scratch. But most machine learning researchers and developers use one of several tried and tested convolutional neural networks such as AlexNet, VGG16, and ResNet-50.

Object detection datasets

While an image classification network can tell whether an image contains a certain object or not, it won’t say where in the image the object is located. Object detection networks provide both the class of objects contained in an image and a bounding box that gives the coordinates of that object.

Object detection networks bear much resemblance to image classification networks and use convolution layers to detect visual features. In fact, most object detection networks take an image classification CNN and repurpose it for object detection.

Object detection is a supervised machine learning problem, which means you must train your models on labeled examples. Each image in the training dataset must be accompanied by a file that includes the boundaries and classes of the objects it contains. There are several open-source tools that create object detection annotations.
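To show what such an annotation boils down to, here is a sketch that reads a deliberately simple, hypothetical CSV layout with one box per line: `filename,label,x_min,y_min,x_max,y_max`. This layout, the file names, and the `BoxAnnotation` and `load_annotations` names are all assumptions for illustration. Real datasets use richer formats, such as Pascal VOC’s XML files or COCO’s JSON file, but each box still comes down to a class label plus corner coordinates.

```python
import csv
import io
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class BoxAnnotation:
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def load_annotations(text):
    """Group box annotations by image file name.

    Assumes the hypothetical layout: filename,label,x_min,y_min,x_max,y_max
    """
    by_image = defaultdict(list)
    for filename, label, *coords in csv.reader(io.StringIO(text)):
        x_min, y_min, x_max, y_max = map(int, coords)
        by_image[filename].append(BoxAnnotation(label, x_min, y_min, x_max, y_max))
    return dict(by_image)


# Made-up sample: two objects in the first image, one in the second
sample = """street_001.jpg,car,34,120,210,260
street_001.jpg,person,250,90,300,240
street_002.jpg,dog,10,15,80,95"""

annotations = load_annotations(sample)
print(len(annotations["street_001.jpg"]))  # 2
```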

The object detection network is trained on the annotated data until it can find regions in images that correspond to each kind of object.

Now let’s look at a couple of object-detection neural network architectures.

The R-CNN deep learning model

The Region-based Convolutional Neural Network (R-CNN) was proposed by AI researchers at the University of California, Berkeley, in 2014. The R-CNN is composed of three key components.

First, a region selector uses “selective search,” an algorithm that finds regions of pixels in the image that might represent objects, also called “regions of interest” (RoI). The region selector generates around 2,000 regions of interest for each image.

Next, the RoIs are warped into a predefined size and passed on to a convolutional neural network. The CNN processes every region separately and extracts its features through a series of convolution operations. The CNN uses fully connected layers to encode the feature maps into a single-dimensional vector of numerical values.
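The warping step can be sketched as cropping each region and resampling it to a fixed square size, regardless of its original shape. This is a minimal sketch assuming a single-channel image as a list of lists, boxes given as `(x_min, y_min, x_max, y_max)` with exclusive maximums, and nearest-neighbor sampling, a simplification chosen for brevity rather than the exact warping the R-CNN paper uses. `crop_and_warp` is a hypothetical name.

```python
def crop_and_warp(image, box, out_size):
    """Crop box = (x_min, y_min, x_max, y_max) (max exclusive) and resize
    it to out_size x out_size using nearest-neighbor sampling.

    Sketch assumptions: single channel, nearest-neighbor resampling.
    """
    x_min, y_min, x_max, y_max = box
    crop_h = y_max - y_min
    crop_w = x_max - x_min
    return [
        [image[y_min + (r * crop_h) // out_size][x_min + (c * crop_w) // out_size]
         for c in range(out_size)]
        for r in range(out_size)
    ]


# 4x4 toy image with values 0..15
image = [[r * 4 + c for c in range(4)] for r in range(4)]

# Upscale a 2x2 region to 4x4
print(crop_and_warp(image, (0, 0, 2, 2), 4))
# [[0, 0, 1, 1], [0, 0, 1, 1], [4, 4, 5, 5], [4, 4, 5, 5]]

# Downscale the full 4x4 image to 2x2
print(crop_and_warp(image, (0, 0, 4, 4), 2))  # [[0, 2], [8, 10]]
```

Because every region comes out the same size, the CNN can process each of the roughly 2,000 regions in turn, which is also why R-CNN ends up repeating so much work.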

Finally, a classifier machine learning model maps the encoded features obtained from the CNN to the output classes. The classifier has a separate output class for “background,” which corresponds to anything that isn’t an object.

The original R-CNN paper suggests the AlexNet convolutional neural network for feature extraction and a support vector machine (SVM) for classification. But in the years since the paper was published, researchers have used newer network architectures and classification models to improve the performance of R-CNN.

R-CNN suffers from a few problems. First, the model must generate and crop 2,000 separate regions for each image, which can take quite a while. Second, the model must compute the features for each of the 2,000 regions separately. This amounts to a lot of calculations and slows down the process, making R-CNN unsuitable for real-time object detection. And finally, the model is composed of three separate components, which makes it hard to integrate computations and improve speed.

Fast R-CNN

In 2015, the lead author of the R-CNN paper proposed a new architecture called Fast R-CNN, which solved some of the problems of its predecessor. Fast R-CNN brings feature extraction and classification into a single machine learning model.

Fast R-CNN receives an image and a set of RoIs and returns a list of bounding boxes and classes of the objects detected in the image.

One of the key innovations in Fast R-CNN was the “RoI pooling layer,” an operation that takes CNN feature maps and regions of interest for an image and provides the corresponding features for each region. This allowed Fast R-CNN to extract features for all the regions of interest in the image in a single pass, as opposed to R-CNN, which processed each region separately. This resulted in a significant boost in speed.
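The core of RoI pooling is to split each region of interest into a fixed grid of bins and max-pool each bin, so regions of any size produce outputs of the same shape. This is a minimal sketch under several assumptions: a single-channel feature map as a list of lists, RoIs already expressed in feature-map coordinates (a real implementation would first scale them down from image coordinates), and bin edges rounded outward with floor/ceil. `roi_pool` is a hypothetical name.

```python
import math


def roi_pool(feature_map, roi, out_size=2):
    """Max-pool one region of interest into an out_size x out_size grid.

    roi = (x_min, y_min, x_max, y_max) in feature-map coordinates, max
    exclusive (assumption). Bin edges are rounded outward (assumption).
    """
    x_min, y_min, x_max, y_max = roi
    h = y_max - y_min
    w = x_max - x_min
    pooled = []
    for i in range(out_size):
        y0 = y_min + math.floor(i * h / out_size)
        y1 = y_min + math.ceil((i + 1) * h / out_size)
        row = []
        for j in range(out_size):
            x0 = x_min + math.floor(j * w / out_size)
            x1 = x_min + math.ceil((j + 1) * w / out_size)
            # Keep the strongest activation inside this bin
            row.append(max(feature_map[y][x]
                           for y in range(y0, y1) for x in range(x0, x1)))
        pooled.append(row)
    return pooled


# One feature map computed once for the whole image (4 rows x 5 columns)
feature_map = [[1, 8, 2, 0, 3],
               [4, 2, 6, 1, 0],
               [0, 5, 3, 9, 2],
               [7, 1, 0, 4, 6]]

# Two regions of different sizes reuse the same feature map
print(roi_pool(feature_map, (0, 0, 4, 4)))  # [[8, 6], [7, 9]]
print(roi_pool(feature_map, (1, 0, 4, 3)))  # [[8, 6], [6, 9]]
```

Both regions read from the same feature map, which is the saving over R-CNN: the convolutions run once per image instead of once per region.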

However, one problem remained unsolved. Fast R-CNN still required the regions of the image to be extracted and provided as input to the model. Fast R-CNN was still not ready for real-time object detection.

Faster R-CNN

Faster R-CNN, introduced in 2016, solves the final piece of the object-detection puzzle by integrating the region extraction mechanism into the object detection network.

Faster R-CNN takes an image as input and returns a list of object classes and their corresponding bounding boxes.

The architecture of Faster R-CNN is largely similar to that of Fast R-CNN. Its main innovation is the “region proposal network” (RPN), a component that takes the feature maps produced by a convolutional neural network and proposes a set of bounding boxes where objects might be located. The proposed regions are then passed to the RoI pooling layer. The rest of the process is similar to Fast R-CNN.

By integrating region detection into the main neural network architecture, Faster R-CNN achieves near-real-time object detection speed.

YOLO

In 2016, researchers at the University of Washington, the Allen Institute for AI, and Facebook AI Research proposed “You Only Look Once” (YOLO), a family of neural networks that improved the speed and accuracy of object detection with deep learning.

The main improvement in YOLO is the integration of the entire object detection and classification process into a single network. Instead of extracting features and regions separately, YOLO performs everything in a single pass through a single network, hence the name “You Only Look Once.”

YOLO can perform object detection at video streaming framerates and is suitable for applications that require real-time inference.

In the past few years, deep learning object detection has come a long way, evolving from a patchwork of different components to a single neural network that works efficiently. Today, many applications use object-detection networks as one of their main components. It’s in your phone, computer, car, camera, and more. It will be interesting (and perhaps creepy) to see what can be achieved with increasingly advanced neural networks.

Ben Dickson is a software engineer and the founder of TechTalks, a blog that explores the ways technology is solving and creating problems.

This story originally appeared on Bdtechtalks.com. Copyright 2021