
Increasingly, AI is being pitched as a way to prevent the estimated 340 million-plus workplace accidents that occur worldwide every year. Using machine learning, startups are analyzing camera feeds from industrial and manufacturing facilities to spot unsafe behaviors, alerting managers when employees make a dangerous mistake.

But while marketing materials breathlessly highlight their life-saving potential, the technologies threaten to violate the privacy of workers who aren’t aware their movements are being analyzed. Companies may disclose to staff that they’re subjected to video surveillance in the workplace, but it’s unclear whether those deploying, or providing, AI-powered health and safety platforms are fully transparent about the tools’ capabilities.

Computer vision

The majority of AI-powered health and safety platforms for workplaces leverage computer vision to identify potential hazards in real time. Fed hand-labeled images from cameras, the web, and other sources, the systems learn to distinguish between safe and unsafe events, like when a worker steps too close to a high-pressure valve.

For example, Everguard.ai, an Irvine, California-based joint venture backed by Boston Consulting Group and SeAH, claims its Sentri360 product reduces incidents and injuries using a combination of AI, computer vision, and industrial internet of things (IIoT) devices. The company’s platform, which was developed for the steel industry, ostensibly learns “on the job,” improving safety and productivity as it adapts to new environments.

“Before the worker walks too close to the truck or load in the process, computer vision cameras capture and collect data, analyze the data, recognize the potential hazard, and within seconds (at most), notify both the worker and the operator to stop via a wearable device,” the company explains in a recent blog post. “Because of the routine nature of the task, the operator and the worker may have been distracted causing either or both to become unaware of their surroundings.”

But Everguard doesn’t disclose on its website how it trained its computer vision algorithms or whether it retains any recordings of workers. In lieu of this information, how (or whether) the company ensures data remains anonymous is an open question, as is whether Everguard requires its customers to notify their employees that their movements are being analyzed.

“By virtue of data gathering in such diverse settings, Everguard.ai naturally has a deep collection of images, video, and telemetry from ethnographically and demographically diverse worker communities. This diverse domain specific data is combined from bias-sensitive public sources to make the models more robust,” Everguard CEO Sandeep Pandya told VentureBeat via email. “Finally, industrial workers tend to standardize on protective equipment and uniforms, so there is an alignment around worker images globally depending on vertical, e.g. steel workers in various countries tend to have similar ‘looks’ from a computer vision perspective.”

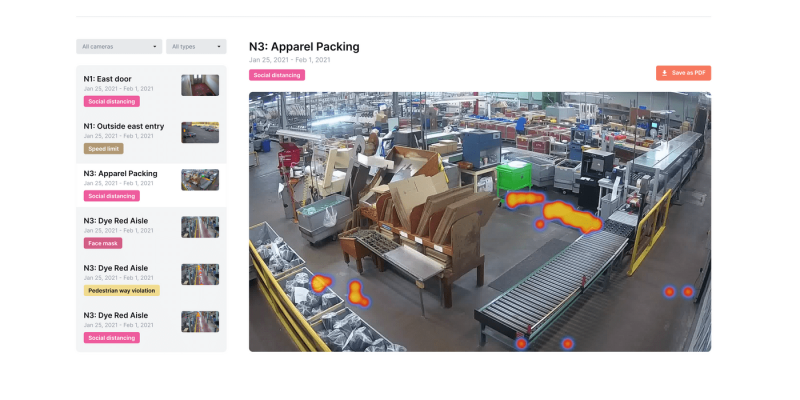

Everguard competitor Intenseye, a 32-person company that’s raised $29 million in venture capital, similarly integrates with existing cameras and uses computer vision to monitor employees on the job. Incorporating federal and local workplace safety laws as well as organizations’ rules, Intenseye can identify 35 kinds of scenarios within workplaces, including the presence of personal protective equipment, area and vehicle controls, housekeeping, and various pandemic control measures.

“Intenseye’s computer vision models are trained to detect … employee health and safety incidents that human inspectors cannot possibly see in real time. The system detects compliant behaviors to track real-time compliance scores for all use cases and locations,” CEO Sercan Esen told VentureBeat via email. “The system is live across over 15 countries and 40 cities, having already detected over 1.8 million unsafe acts in 18 months.”

Image Credit: Intenseye

When Intenseye spots a violation, health and safety professionals receive an alert instantly via text, smart speaker, smart device, or email. The platform also takes an aggregate of compliance within a facility to generate a score and diagnose potential problem areas.

Unlike Everguard, Intenseye is transparent about how it treats and retains data. On its website, the company writes: “Camera feed is processed and deleted on the fly and never stored. Our system never identifies people, nor stores identities. All the output is anonymized and aggregated and reported by our dashboard and API as visual or tabular data. We don’t rely on facial recognition, instead taking in visual cues from all features across the body.”
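Intenseye doesn’t publish how its score is computed, so the following is only a rough sketch of what aggregating detections into a per-area compliance score might look like. The record layout, the area and rule names, and the 70% cut-off are all assumptions made for illustration, not details of any vendor’s product.

```python
from collections import defaultdict

# Hypothetical detection records: (area, rule checked, was the worker compliant?)
observations = [
    ("loading_dock", "hard_hat", True),
    ("loading_dock", "hard_hat", False),
    ("loading_dock", "vehicle_zone", False),
    ("loading_dock", "vehicle_zone", True),
    ("assembly_line", "hard_hat", True),
    ("assembly_line", "hard_hat", True),
    ("assembly_line", "housekeeping", True),
    ("assembly_line", "housekeeping", False),
]

def compliance_scores(records):
    """Return the percentage of compliant observations for each area."""
    totals = defaultdict(int)
    compliant = defaultdict(int)
    for area, _rule, ok in records:
        totals[area] += 1
        compliant[area] += int(ok)
    return {area: 100.0 * compliant[area] / totals[area] for area in totals}

def problem_areas(scores, threshold=70.0):
    """List areas whose score falls below an (assumed) threshold."""
    return sorted(area for area, score in scores.items() if score < threshold)

scores = compliance_scores(observations)
flagged = problem_areas(scores)
print(scores, flagged)
```

Note that nothing in a score like this requires knowing who was observed: only counts per area and rule are kept, which is the kind of aggregation the vendors’ privacy claims rest on.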

“Our main priority at intenseye is to help save lives but a close second is to ensure that workers’ privacy is protected,” Esen added. “Our AI model is built to blur out the faces of workers to ensure anonymity. Privacy is, and will continue to be, a top priority for Intenseye and it is something that we will not waiver on.”

San Francisco, California-based Protex AI claims its workplace monitoring software is “privacy-preserving,” plugging into existing CCTV infrastructure to identify areas of high risk based on rules. But public information is scarce. On its website, Protex AI doesn’t detail the steps it’s taken to anonymize data, or clarify whether it uses the data to fine-tune algorithms for other customers.

Training computer vision models

Computer vision algorithms require lots of training data. That’s not a problem in domains with many examples, like apparel, pets, houses, and food. But when photos of the events or objects an algorithm is being trained to detect are sparse, it becomes more challenging to develop a system that’s highly generalizable. Training models on small datasets without sufficiently diverse examples runs the risk of overfitting, where the algorithm can’t perform accurately against unseen data.

Fine-tuning can address this “domain gap,” at least somewhat. In machine learning, fine-tuning involves making small adjustments to boost the performance of an AI algorithm in a particular environment. For example, a computer vision algorithm already trained on a large dataset (e.g., cat pictures) can be tailored to a smaller, specialized corpus with domain-specific examples (e.g., pictures of a cat breed).

Another approach to overcoming the data sparsity problem is synthetic data, or data generated by algorithms to supplement real-world datasets. Among others, autonomous vehicle companies like Waymo, Aurora, and Cruise use synthetic data to train the perception systems that guide their cars along physical roads.

But synthetic data isn’t the end-all, be-all. Worst case, it can give rise to undesirable biases in the training datasets. A study conducted by researchers at the University of Virginia found that two prominent research-image collections displayed gender bias in their depiction of sports and other activities, showing images of shopping linked to women while associating things like coaching with men. Another computer vision corpus, 80 Million Tiny Images, was found to have a range of racist, sexist, and otherwise offensive annotations, such as nearly 2,000 images labeled with the N-word, and labels like “rape suspect” and “child molester.”
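To make overfitting concrete, here is a toy sketch rather than anything resembling a production vision model. It swaps images for single numbers, and the data, the four deliberately mislabeled training points, and both “models” are invented for the illustration. One model memorizes every training example; the other learns a single cut-off.

```python
# Toy 1-D stand-in for an image classifier; all data here is made up.
def true_label(x):
    return x >= 0.5  # the real rule the model should learn

# Small training set: 20 evenly spaced points, 4 of them mislabeled (noise).
train_x = [i / 20 for i in range(20)]
noisy = {2, 6, 13, 17}
train_y = [true_label(x) != (i in noisy) for i, x in enumerate(train_x)]

# Larger, clean test set the models never see during training.
test_x = [(j + 0.5) / 200 for j in range(200)]
test_y = [true_label(x) for x in test_x]

def memorizer(x):
    """1-nearest-neighbor: copy the label of the closest training point."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]

def fit_threshold():
    """Pick the single cut-off that agrees with the most training labels."""
    def train_hits(t):
        return sum((x >= t) == y for x, y in zip(train_x, train_y))
    return max(train_x, key=train_hits)

threshold = fit_threshold()

def simple(x):
    """One-parameter model: everything at or above the cut-off is positive."""
    return x >= threshold

def accuracy(predict, xs, ys):
    return sum(predict(x) == y for x, y in zip(xs, ys)) / len(xs)

memorizer_train = accuracy(memorizer, train_x, train_y)
memorizer_test = accuracy(memorizer, test_x, test_y)
simple_train = accuracy(simple, train_x, train_y)
simple_test = accuracy(simple, test_x, test_y)

print("memorizer train/test:", memorizer_train, memorizer_test)
print("simple    train/test:", simple_train, simple_test)
```

The memorizer scores perfectly on the data it was trained on because it has also memorized the mislabeled points, and it repeats those mistakes on unseen data. The simpler model gets some training examples “wrong” but generalizes better, which is the gap the paragraph above describes.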
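As a deliberately tiny sketch of the idea, the example below starts from an already “trained” linear model, keeps most of it frozen, and nudges a single parameter using a handful of domain-specific examples. The starting weights, the four data points, the learning rate, and the step count are all assumed values; real vision systems adjust far larger networks, usually through a deep learning framework.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune_bias(w, b, data, lr=0.1, steps=50):
    """Gradient descent on the bias only; the weight w stays frozen."""
    for _ in range(steps):
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        b -= lr * grad_b
    return b

# Assumed result of earlier training on a large, general dataset: y is about 2x.
pretrained_w, pretrained_b = 2.0, 0.0

# Small, made-up domain dataset: same trend, but shifted upward by about 1.
domain_data = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
tuned_b = fine_tune_bias(pretrained_w, pretrained_b, domain_data)
loss_after = mse(pretrained_w, tuned_b, domain_data)

print("bias after fine-tuning:", round(tuned_b, 3))
print("loss before/after:", round(loss_before, 3), round(loss_after, 3))
```

Because only one parameter moves, four examples are enough to close most of the gap. The same property is why fine-tuning helps only “somewhat”: anything the frozen part of the model learned badly, including bias in the original training data, carries over unchanged.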

Image Credit: Protex AI

Bias can arise from other sources, like differences in the sun path between the northern and southern hemispheres and variations in background scenery. Studies show that even differences between camera models (e.g., resolution and aspect ratio) can cause an algorithm to be less effective in classifying the objects it was trained to detect. Another frequent confounder is technology and techniques that favor lighter skin, which include everything from sepia-tinged film to low-contrast digital cameras.

Recent history is filled with examples of the consequences of training computer vision models on biased datasets, like virtual backgrounds and automatic photo-cropping tools that disfavor darker-skinned people. Back in 2015, a software engineer pointed out that the image recognition algorithms in Google Photos were labeling his Black friends as “gorillas.” And the nonprofit AlgorithmWatch has shown that Google’s Cloud Vision API at one time automatically labeled thermometers held by a Black person as “guns” while labeling thermometers held by a light-skinned person as “electronic devices.”

Proprietary solutions

Startups offering AI-powered health and safety platforms are often reluctant to reveal how they train their algorithms, citing competition. But the capabilities of their systems hint at the techniques that might’ve been used to bring them into production.

For example, Everguard’s Sentri360, which was initially deployed at SeAH Group steel factories and construction sites in South Korea and in Irvine and Rialto, California, can draw on multiple camera feeds to spot workers who are about to walk under a heavy load being moved by construction equipment. Everguard claims that Sentri360 can improve from experience and new computer vision algorithms, for instance by learning to detect whether a worker is wearing a helmet in a dimly lit part of a plant.

“A camera can detect if a person is looking in the right direction,” Pandya told Fastmarkets in a recent interview.

In the way that health and safety platforms analyze features like head pose and gait, they’re akin to computer vision-based systems that detect weapons and automatically charge brick-and-mortar customers for goods placed in their shopping carts. Reporting has revealed that some of the companies developing these systems have engaged in questionable behavior, like using CGI simulations and videos of actors (even employees and contractors) posing with toy guns to feed algorithms made to spot firearms.

Insufficient training leads the systems to perform poorly. ST Technologies’ facial recognition and weapon-detecting platform was found to misidentify Black children at a higher rate and frequently mistook broom handles for guns. Meanwhile, Walmart’s AI- and camera-based anti-shoplifting technology, which is provided by Everseen, came under scrutiny last May over its reportedly poor detection rates.

The stakes are higher in workplaces like factory floors and warehouses. If a system were to fail to identify a worker in a potentially hazardous situation because of their skin color, for example, they could be put at risk, assuming they were aware the system was recording them in the first place.
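One way an operator could check for this kind of failure is to compare how often real hazards go undetected for different groups of workers. The sketch below assumes a hand-reviewed audit log exists; the group labels, the records, and the field layout are hypothetical and not drawn from any vendor’s system.

```python
# Hypothetical audit log: (worker group, was a hazard present?, did the system alert?)
audit_log = [
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", False, False),
]

def miss_rate_by_group(records):
    """Share of real hazards that triggered no alert, per group."""
    hazards = {}
    misses = {}
    for group, hazard_present, alert_raised in records:
        if not hazard_present:
            continue  # only real hazards can be missed
        hazards[group] = hazards.get(group, 0) + 1
        if not alert_raised:
            misses[group] = misses.get(group, 0) + 1
    return {group: misses.get(group, 0) / hazards[group] for group in hazards}

miss_rates = miss_rate_by_group(audit_log)
print(miss_rates)
```

In this made-up log, hazards involving the second group are missed twice as often as those involving the first. A gap like that would be invisible in a single facility-wide accuracy figure, which is why per-group breakdowns matter.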

Mission creep

While the purported goal of the computer vision-based workplace monitoring products on the market is health and safety, the technology could be co-opted for other, less humanitarian purposes. Many privacy experts worry that they’ll normalize greater levels of surveillance, capturing data about workers’ movements and allowing managers to chastise employees in the name of productivity.

Each state has its own surveillance laws, but most give wide discretion to employers so long as the equipment they use to track employees is plainly visible. There’s also no federal legislation that explicitly prohibits companies from monitoring their staff during the workday.

“We support the need for data privacy through the use of ‘tokenization’ of sensitive information or image and sensor data that the organization deems proprietary,” Pandya said. “Where personal information must be used in a limited way to support the higher cause or worker safety, e.g. worker safety scoring for long term coaching, the organization ensures their employees are aware of and accepting of the sensor network. Awareness is generated as employees participate in the training and on-boarding that happens as part of post sales-customer success. Regarding duration of data retention, that can vary by customer requirement, but generally customers want to have access to data for a month or more in the event insurance claims and accident reconstruction requires it.”

That has allowed employers like Amazon to adopt algorithms designed to track productivity at a granular level. For example, the tech giant’s notorious “Time Off Task” system dings warehouse employees for spending too much time away from the work they’re assigned to perform, like scanning barcodes or sorting products into bins. The requirements imposed by these algorithms gave rise to California’s proposed AB-701 legislation, which would prevent employers from counting health and safety law compliance against workers’ productive time.

“I don’t think the likely impacts are necessarily due to the specifics of the technology so much as what the technology ‘does,’” University of Washington computer scientist Os Keyes told VentureBeat via email. “[It’s] setting up impossible tensions between the top-down expectations and bottom-up practices … When you look at the kind of blue collar, high-throughput workplaces these companies market towards (meatpacking, warehousing, shipping) you’re looking at environments that are often simply not designed to allow for, say, social distancing, without seriously disrupting workflows. This means that technology becomes at best a constant stream of notifications that management fails to attend to, or at worse, sticks workers in an impossible situation where they have to both follow unrealistic distancing expectations and complete their job, thus providing management a convenient excuse to fire ‘troublemakers.’”

Startups selling AI-powered health and safety platforms present a positive spin, pitching the systems as a way to “[help] safety professionals recognize trends and understand the areas that require coaching.” In a blog post, Everguard notes that its technology could be used to “reinforce positive behaviors and actions” through constant observation. “This data enables leadership to use ‘right behaviors’ to reinforce and help to sustain the expectation of on-the-job safety,” the company asserted.

But even potential customers that stand to benefit, like Big River Steel, aren’t entirely sold on the promise. CEO David Stickler told Fastmarkets that he was concerned a system like the one from Everguard would become a substitute for proper worker training and trigger too many unnecessary alerts, which could impede operations and even decrease safety.

“We have to make sure people don’t get a false sense of security just because of a new safety software package,” he told the publication, adding: “We want to do rigorous testing under live operating conditions such that false negatives are minimized.”
