DeepMind today detailed its latest efforts to build AI systems capable of completing a range of different, unique tasks. By designing a virtual environment called XLand, the Alphabet-backed lab says it managed to train systems that can succeed at challenges and games including hide and seek, capture the flag, and finding objects, some of which they didn't encounter during training.

The AI technique known as reinforcement learning has shown remarkable potential, enabling systems to learn to play games like chess, shogi, Go, and StarCraft II through a repetitive process of trial and error. But a lack of training data has been one of the major factors preventing the behavior of reinforcement learning-trained systems from becoming general enough to apply across diverse games. Without being able to train on a sufficiently large set of tasks, systems trained with reinforcement learning have been unable to adapt their learned behaviors to new tasks.
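To make "trial and error" concrete, here is a minimal sketch of the simplest reinforcement learning setting, a multi-armed bandit with an epsilon-greedy learner. It is only an illustration of the general idea, not DeepMind's method (which uses deep reinforcement learning in rich 3D worlds); the function name, the reward means, and the `epsilon` value are hypothetical choices for the example.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays best purely by trial and error.

    true_means: hidden average reward of each action (hypothetical values,
    unknown to the learner). Returns the learned value estimates and how
    often each action was tried.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # running average reward per action
    counts = [0] * n_actions       # number of times each action was tried
    for _ in range(steps):
        # Explore a random action with probability epsilon,
        # otherwise exploit the action with the best current estimate.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment returns a noisy reward around the action's true mean.
        reward = rng.gauss(true_means[action], 1.0)
        counts[action] += 1
        # Incremental mean update: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.1, 0.5, 1.0])
print([round(e, 2) for e in estimates], counts)
```

The learner is never told which action is best; it discovers it only from the rewards its own choices produce. That is also why the range of situations it is trained on limits what it can learn.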

DeepMind developed XLand to address this. It consists of multiplayer games inside consistent, “human-relatable” digital worlds. The simulated space allows for procedurally generated tasks, enabling systems to train on, and generate experience from, tasks that are created programmatically.

XLand offers billions of tasks across varied worlds and players. AI controls players in an environment meant to simulate the physical world, training on a number of cooperative and competitive games. Each player's objective is to maximize its rewards, and each game defines the individual rewards for the players.

“These complex, non-linear interactions create an ideal source of data to train on, since sometimes even small changes in the components of the environment can result in large changes in the challenges for the [systems],” DeepMind explains in a blog post.

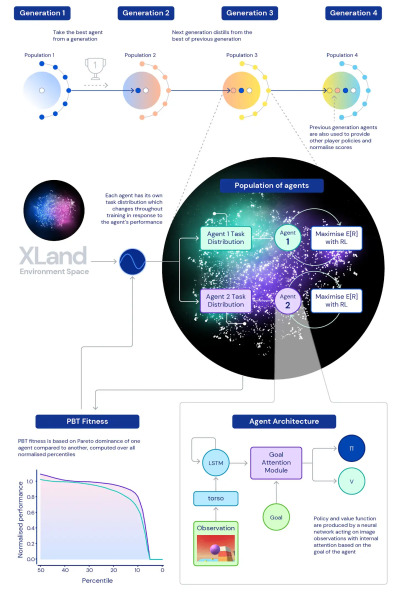

XLand trains systems by dynamically generating tasks in response to the systems' behavior. The systems' task-generating functions evolve to match their relative performance and robustness, and each generation of systems bootstraps from the previous one, introducing increasingly capable players into the multiplayer environment.
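The article does not say exactly how XLand chooses tasks. As a loose illustration of one common idea behind adaptive curricula, this sketch keeps only tasks that the current agent sometimes, but not always, solves, so the selected tasks get harder as the agent improves. Everything here is hypothetical: the one-number difficulty scale, the logistic success model, the 0.1/0.9 thresholds, and the fixed skill increment that stands in for actual training. It is not DeepMind's implementation.

```python
import math
import random


def success_probability(skill, difficulty):
    # Hypothetical model: chance of success is logistic in (skill - difficulty).
    return 1.0 / (1.0 + math.exp(difficulty - skill))


def estimate_success(skill, difficulty, rng, episodes=50):
    # Monte Carlo estimate: play a few episodes and count the wins.
    wins = sum(rng.random() < success_probability(skill, difficulty)
               for _ in range(episodes))
    return wins / episodes


def select_tasks(skill, candidates, rng, low=0.1, high=0.9):
    # Keep tasks that are neither trivially easy nor hopeless for this agent.
    return [d for d in candidates
            if low < estimate_success(skill, d, rng) < high]


def run_curriculum(generations=5, seed=0):
    rng = random.Random(seed)
    skill = 0.0
    history = []  # mean difficulty of the selected tasks, per generation
    for _ in range(generations):
        candidates = [rng.uniform(-5, 15) for _ in range(200)]
        tasks = select_tasks(skill, candidates, rng)
        history.append(sum(tasks) / len(tasks) if tasks else float("nan"))
        skill += 2.0  # hypothetical stand-in for a generation of training
    return history


print([round(h, 1) for h in run_curriculum()])
```

The filter is the key design choice: tasks the agent always wins or always loses carry little learning signal, so the generator concentrates on the band in between.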

DeepMind says that after training systems for five generations (700,000 unique games in 4,000 worlds within XLand, with each system experiencing 200 billion training steps), it saw consistent improvements in both learning and performance. DeepMind found that the systems exhibited general behaviors such as experimentation, like changing the state of the world until they achieved a rewarding state. The researchers also observed that the systems were aware of the basics of their bodies, the passage of time, and the high-level structure of the games they encountered.

With just 30 minutes of focused training on a new, complex task, the systems could quickly adapt, whereas agents trained with reinforcement learning from scratch couldn't learn the tasks at all. “DeepMind’s mission of solving intelligence to advance science and humanity led us to explore how we could overcome this limitation to create AI [systems] with more general and adaptive behaviour,” DeepMind said. “Instead of learning one game at a time, these [systems] would be able to react to completely new conditions and play a whole universe of games and tasks, including ones never seen before.”