Graphs are everywhere around us. Your social network is a graph of people and relationships. So is your family. The roads you take to go from point A to point B constitute a graph. The links that connect this webpage to others form a graph. When your employer pays you, your payment goes through a graph of financial institutions.

Basically, anything that is composed of linked entities can be represented as a graph. Graphs are excellent tools to visualize relations between people, objects, and concepts. Beyond visualizing information, however, graphs can also be good sources of data to train machine learning models for complicated tasks.

Graph neural networks (GNN) are a type of machine learning algorithm that can extract important information from graphs and make useful predictions. With graphs becoming more pervasive and richer with information, and artificial neural networks becoming more popular and capable, GNNs have become a powerful tool for many important applications.

Transforming graphs for neural network processing

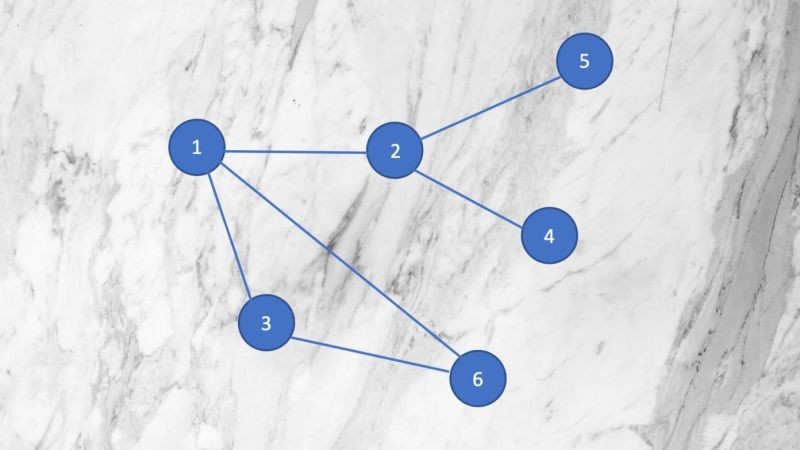

Every graph is composed of nodes and edges. For example, in a social network, nodes can represent users and their characteristics (e.g., name, gender, age, city), while edges can represent the relations between the users. A more complex social graph can include other types of nodes, such as cities, sports teams, and news outlets, as well as edges that describe the relations between the users and those nodes.

Unfortunately, the graph structure is not well suited for machine learning. Neural networks expect to receive their data in a uniform format. Multi-layer perceptrons expect a fixed number of input features. Convolutional neural networks expect a grid that represents the different dimensions of the data they process (e.g., width, height, and color channels of images).

Graphs can come in different structures and sizes, which does not conform to the rectangular arrays that neural networks expect. Graphs also have other characteristics that make them different from the type of information that classic neural networks are designed for. For example, graphs are “permutation invariant,” which means that changing the order and position of nodes doesn’t make a difference as long as their relations remain the same. In contrast, changing the order of pixels results in a different image and will cause the neural network that processes them to behave differently.
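To make the permutation point concrete, here is a minimal Python sketch. It assumes a made-up four-node graph stored as an edge list, and the helper names (`adjacency`, `relabel`, `degree_multiset`) are hypothetical. Relabeling the nodes rearranges the rows and columns of the adjacency matrix. The graph itself is unchanged, so an order-independent summary, here the sorted list of node degrees, stays the same.

```python
def adjacency(n, edges):
    """Build a symmetric adjacency matrix (list of lists) for an undirected graph."""
    a = [[0] * n for _ in range(n)]
    for u, v in edges:
        a[u][v] = 1
        a[v][u] = 1
    return a

def relabel(edges, perm):
    """Rename every node i to perm[i]; the connections themselves are unchanged."""
    return [(perm[u], perm[v]) for u, v in edges]

def degree_multiset(a):
    """An order-independent summary of the graph: the sorted node degrees."""
    return sorted(sum(row) for row in a)

edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
perm = [2, 0, 3, 1]  # node 0 becomes 2, node 1 becomes 0, and so on

a_original = adjacency(4, edges)
a_permuted = adjacency(4, relabel(edges, perm))

print(a_original == a_permuted)  # False: the matrices are laid out differently
print(degree_multiset(a_original) == degree_multiset(a_permuted))  # True: same graph
```

A model that consumed the raw matrix row by row would see two different inputs here. That is why GNN layers are built from operations that do not depend on node order.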

To make graphs useful to deep learning algorithms, their data must be transformed into a format that can be processed by a neural network. The type of formatting used to represent graph data can vary depending on the type of graph and the intended application, but in general, the key is to represent the information as a series of matrices.

For example, consider a social network graph. The nodes can be represented as a table of user characteristics. The node table, where each row contains information about one entity (e.g., user, customer, bank transaction), is the type of information that you would provide to a normal neural network.

But graph neural networks can also learn from other information that the graph contains. The edges, the lines that connect the nodes, can be represented in the same way, with each row containing the IDs of the users and additional information such as date of friendship, type of relationship, etc. Finally, the general connectivity of the graph can be represented as an adjacency matrix that shows which nodes are connected to each other.
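As a rough illustration, the sketch below turns a toy social network into the three structures just described: a node feature matrix, an edge list with per-edge features, and an adjacency matrix. All users, values, and the integer codes for cities and relationship types are hypothetical. Text attributes such as names would also need a numeric encoding before a network could use them.

```python
# Hypothetical toy social network; every value is made up for illustration.
users = [
    # (user_id, age, city_code)
    (0, 34, 1),
    (1, 27, 2),
    (2, 45, 1),
    (3, 19, 3),
]

friendships = [
    # (user_a, user_b, year_connected, relationship_type)
    (0, 1, 2015, "coworker"),
    (0, 2, 2018, "family"),
    (2, 3, 2020, "friend"),
]

# Categorical edge attributes must become numbers (assumed encoding).
relationship_codes = {"coworker": 0, "family": 1, "friend": 2}

# Node table -> node feature matrix: one row per user, one column per attribute.
node_features = [[age, city] for _, age, city in users]

# Edge table -> edge index (who is connected) plus a matrix of edge features.
edge_index = [(a, b) for a, b, _, _ in friendships]
edge_features = [[year, relationship_codes[rel]] for _, _, year, rel in friendships]

# Overall connectivity -> adjacency matrix (undirected, so it is symmetric).
n = len(users)
adjacency = [[0] * n for _ in range(n)]
for a, b in edge_index:
    adjacency[a][b] = 1
    adjacency[b][a] = 1

for row in adjacency:
    print(row)
```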

When all of this information is provided to the neural network, it can extract patterns and insights that go beyond the simple information contained in the individual components of the graph.

Graph embeddings

Graph neural networks can be created like any other neural network, using fully connected layers, convolutional layers, pooling layers, etc. The type and number of layers depend on the type and complexity of the graph data and the desired output.

The GNN receives the formatted graph data as input and produces a vector of numerical values that represent relevant information about nodes and their relations.

This vector representation is called a “graph embedding.” Embeddings are often used in machine learning to transform complicated information into a structure that can be differentiated and learned. For example, natural language processing systems use word embeddings to create numerical representations of words and their relations to each other.

How does the GNN create the graph embedding? When the graph data is passed to the GNN, the features of each node are combined with those of its neighboring nodes. This is called “message passing.” If the GNN is composed of more than one layer, then subsequent layers repeat the message-passing operation, gathering data from neighbors of neighbors and aggregating them with the values obtained from the previous layer. For example, in a social network, the first layer of the GNN would combine the data of the user with those of their friends, the next layer would add data from the friends of friends, and so on. Finally, the output layer of the GNN produces the embedding, which is a vector representation of the node’s data and its knowledge of other nodes in the graph.
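The message-passing loop can be sketched in a few lines of plain Python. This is an illustrative sketch, not any particular library’s implementation. It assumes mean aggregation over a node and its neighbors; sum and max are also common choices. It also assumes an optional, hypothetical fixed weight matrix in place of one learned during training.

One-hot input features make it easy to see how far information travels. After one layer a node “knows” about its friends, and after two layers it knows about friends of friends.

```python
def message_passing_layer(features, neighbors, weight=None):
    """One round of message passing.

    Each node averages its own feature vector with those of its neighbors,
    then optionally multiplies by a weight matrix and applies ReLU. In a
    trained GNN `weight` would be learned; here it is a hypothetical input.
    """
    new_features = []
    for v, h_v in enumerate(features):
        group = [h_v] + [features[u] for u in neighbors[v]]
        # Aggregate: element-wise mean over the node and its neighbors.
        agg = [sum(vals) / len(group) for vals in zip(*group)]
        if weight is not None:
            # Transform: each row of `weight` yields one output feature, then ReLU.
            agg = [max(0.0, sum(w * x for w, x in zip(row, agg))) for row in weight]
        new_features.append(agg)
    return new_features

# Path graph 0 - 1 - 2 - 3, stored as an adjacency list.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

# One-hot features: entry j of a node's vector tracks information from node j.
h0 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

h1 = message_passing_layer(h0, neighbors)  # first layer: friends
h2 = message_passing_layer(h1, neighbors)  # second layer: friends of friends

def reach(h, node):
    """Indices of the nodes whose information has reached `node`."""
    return [j for j, x in enumerate(h[node]) if x > 0]

print(reach(h1, 0))  # [0, 1]
print(reach(h2, 0))  # [0, 1, 2]
```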

Interestingly, this process is very similar to how convolutional neural networks extract features from pixel data. Accordingly, one very popular GNN architecture is the graph convolutional neural network (GCN), which uses convolution layers to create graph embeddings.
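One widely used form of the graph convolution layer is the propagation rule introduced by Kipf and Welling. It adds a self-loop to every node and normalizes the adjacency matrix by node degree, so high-degree nodes do not dominate. It then multiplies the result by the node features and a weight matrix and applies a nonlinearity. The plain-Python sketch below assumes that formulation with a ReLU. The weight matrix is a hypothetical fixed value rather than a trained one.

```python
import math

def gcn_layer(adjacency, features, weight):
    """One graph convolution layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: n x n 0/1 matrix; features: n x d_in; weight: d_in x d_out.
    """
    n = len(adjacency)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adjacency[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    # Degree of each node in the self-looped graph.
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization: divide each entry by sqrt(deg_i * deg_j).
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Neighborhood aggregation: normalized adjacency times node features.
    d_in = len(features[0])
    agg = [[sum(norm[i][k] * features[k][f] for k in range(n)) for f in range(d_in)]
           for i in range(n)]
    # Linear transform with the weight matrix, then ReLU.
    d_out = len(weight[0])
    return [[max(0.0, sum(agg[i][f] * weight[f][o] for f in range(d_in)))
             for o in range(d_out)] for i in range(n)]

# Two connected nodes with one feature each and an identity weight.
adjacency = [[0, 1], [1, 0]]
features = [[1.0], [3.0]]
weight = [[1.0]]
print(gcn_layer(adjacency, features, weight))  # [[2.0], [2.0]]
```

In the usage example, each node ends up with a degree-normalized blend of its own feature and its neighbor’s. This is the graph analogue of a convolution filter mixing a pixel with the pixels around it.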

Applications of graph neural networks

Once you have a neural network that can learn the embeddings of a graph, you can use it to accomplish different tasks.

Here are a few applications for graph neural networks:

Node classification: One of the powerful applications of GNNs is adding new information to nodes or filling gaps where information is missing. For example, say you are running a social network and you have spotted a few bot accounts. Now you want to find out if there are other bot accounts in your network. You can train a GNN to classify other users in the social network as “bot” or “not bot” based on how close their graph embeddings are to those of the known bots.
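A simplified version of the “how close to the known bots” idea can be sketched as a nearest-neighbor check on embeddings. This sketch assumes the embeddings already came out of a trained GNN and uses made-up 2-D vectors. It compares users by cosine similarity and uses a hypothetical 0.9 threshold. In practice a classifier layer is usually trained on the labeled nodes instead, but the underlying signal is the same.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def flag_bots(embeddings, known_bots, threshold=0.9):
    """Label each unlabeled user 'bot' if its embedding is close to any known bot."""
    labels = {}
    for user, emb in embeddings.items():
        if user in known_bots:
            continue
        best = max(cosine(emb, embeddings[b]) for b in known_bots)
        labels[user] = "bot" if best >= threshold else "not bot"
    return labels

# Hypothetical 2-D embeddings, as if produced by a trained GNN.
embeddings = {
    "alice": [0.9, 0.1],
    "bot_a": [0.1, 0.95],
    "bot_b": [0.15, 0.9],
    "carol": [0.12, 0.93],
    "dave": [0.7, 0.4],
}
print(flag_bots(embeddings, known_bots={"bot_a", "bot_b"}))
# {'alice': 'not bot', 'carol': 'bot', 'dave': 'not bot'}
```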

Edge prediction: Another way to put GNNs to use is to find new edges that can add value to the graph. Going back to our social network, a GNN can find users (nodes) who are close to you in embedding space but who aren’t your friends yet (i.e., there isn’t an edge connecting you to each other). These users can then be introduced to you as friend suggestions.
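A minimal sketch of friend suggestions follows. It assumes precomputed, made-up embeddings and scores each possible new edge with a dot product. Dot-product scoring is one common choice for link prediction, not the only one. Existing friends are excluded, and the remaining users are ranked by score. The function and user names are hypothetical.

```python
def dot(u, v):
    """Dot product: higher means the two embeddings point the same way."""
    return sum(a * b for a, b in zip(u, v))

def suggest_friends(user, embeddings, friendships, k=2):
    """Rank users who are not yet connected to `user` by embedding similarity."""
    friends = {b for a, b in friendships if a == user} | {a for a, b in friendships if b == user}
    candidates = [
        (other, dot(embeddings[user], emb))
        for other, emb in embeddings.items()
        if other != user and other not in friends
    ]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in candidates[:k]]

# Hypothetical embeddings and one existing friendship.
embeddings = {
    "you": [0.8, 0.6],
    "ann": [0.7, 0.7],
    "ben": [0.9, 0.5],
    "cat": [-0.2, 0.9],
    "dan": [0.75, 0.65],
}
friendships = [("you", "ann")]
print(suggest_friends("you", embeddings, friendships))  # ['ben', 'dan']
```

Ann is the closest user overall, but she is skipped because the edge already exists. Only missing edges are candidates for suggestion.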

Clustering: GNNs can glean new structural information from graphs. For example, in a social network where everyone is in one way or another related to others (through friends, or friends of friends, etc.), the GNN can find nodes that form clusters in the embedding space. These clusters can point to groups of users who share similar interests, activities, or other inconspicuous characteristics, regardless of how close their relations are. Clustering is one of the main tools used in machine learning–based marketing.
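One common way to find such clusters is to run a standard clustering algorithm over the node embeddings. The sketch below uses plain k-means, which is a swapped-in technique rather than part of the GNN itself. It assumes made-up 2-D embeddings and seeds the centroids with the first k points, a simple deterministic choice kept for readability.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def nearest(p, centroids):
    """Index of the centroid closest to point p."""
    dists = [squared_distance(p, c) for c in centroids]
    return dists.index(min(dists))

def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points, and repeat."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic initialization
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = [sum(vals) / len(members) for vals in zip(*members)]
    return [nearest(p, centroids) for p in points]

# Hypothetical 2-D user embeddings forming two loose groups.
embeddings = [
    [0.10, 0.20], [0.90, 0.80], [0.15, 0.10],
    [0.85, 0.95], [0.20, 0.15], [0.95, 0.90],
]
labels = kmeans(embeddings, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

Real systems typically use a library implementation with smarter initialization and a convergence check. The idea is the same: users whose embeddings sit near each other end up in the same group.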

Graph neural networks are very powerful tools. They have already found important applications in domains such as route planning, fraud detection, network optimization, and drug research. Wherever there is a graph of related entities, GNNs can help get the most value from the existing data.

Ben Dickson is a software engineer and the founder of TechTalks. He writes about technology, business, and politics.

This story originally appeared on Bdtechtalks.com. Copyright 2021
