Nvidia has launched a new version of its Isaac robot simulation engine on its Omniverse, which is the company’s metaverse simulation for engineers.

The Omniverse is a virtual tool that lets engineers collaborate. It was inspired by the science fiction notion of the metaverse, the universe of virtual worlds that are all interconnected, like in novels such as Snow Crash and Ready Player One. The project began years ago as a proprietary Nvidia project called Holodeck, named after the virtual reality simulation in Star Trek.

But it morphed into a more ambitious industry-wide effort based on the plumbing made possible by the Universal Scene Description (USD) technology Pixar developed for making its movies. Nvidia has spent years and hundreds of millions of dollars on the project, and now it is updating its robot simulations for it.

An open beta

The new Isaac simulation engine is now in open beta so companies and designers can test how their robots work in a simulated environment before they commit to manufacturing the robots, said Gerard Andrews, senior product marketing manager at Nvidia, in an interview with VentureBeat.

Andrews showed me some images and videos of robots working in a digital factory being designed by BMW as a “digital twin.” Once the factory design is done, the digital design will be replicated in the real world as a physical copy. And now the Isaac-based robots will operate more realistically, based on newly available sensors for the robots and more robust simulations.

The simulation not only creates better photorealistic environments but also streamlines synthetic data generation and domain randomization to build ground-truth datasets to train robots in applications from logistics and warehouses to factories of the future.

“Isaac Sim is going into open beta. We’ve had an early adopter program, which has reached thousands of developers in hundreds of individual companies,” Andrews said. “They tried it out and kicked the tires and gave us some good feedback. And we’re proud to take this to the market based on that feedback and a lot of enthusiasm we are seeing from these customers.”

He said Isaac Sim is a realistic simulation, derived from core technologies such as accurate physics, real-time ray tracing, path tracing, and components that behave like they’re supposed to.

“One of the big problems you have is the sim-to-real gap, where the gap between the virtual world and the real world, if it exceeds a certain amount, then the engineers or developers just won’t use simulation,” Andrews said. “They’ll just abandon it and say it is not working.”

Andrews said Isaac Sim running on Omniverse will be a game-changer in the utility of simulators. And he said the simulation has to be good enough that it is worth the time it takes to learn how to use the simulation tools.

“A lot of the use cases we have around manipulation robots, navigating robots, generating synthetic data to train the AI in those robots — we have those use cases built into Isaac already,” Andrews said. “And then finally, the big benefit that we get from being a part of the Omniverse platform is seamless connectivity and interoperability with all these other tools that people may be using in their 3D workloads. We can bring those assets into our simulation environment where we’re developing the robot, training the robot, or testing the robot.”

The Omniverse and Isaac

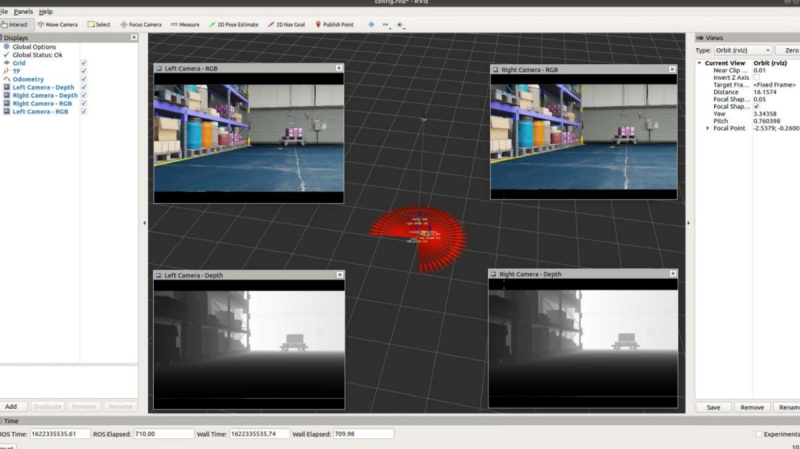

Image Credit: Nvidia

The Omniverse is the underlying foundation for Nvidia’s simulators, including the Isaac platform, which now includes several new features.

Built on the Nvidia Omniverse platform, Isaac Sim is a robotics simulation application and synthetic data generation tool. It allows roboticists to train and test their robots more efficiently by providing a realistic simulation of the robot interacting with compelling environments that can expand coverage beyond what is possible in the real world.

This release of Isaac Sim also adds improved multi-camera support and sensor capabilities, and a PTC OnShape CAD importer to make it easier to bring in 3D assets. These new features will expand the breadth of robots and environments that can be successfully modeled and deployed at every stage: from design and development of the physical robot, to training the robot, to deploying it in a “digital twin” in which the robot is simulated and tested in an accurate and photorealistic virtual environment.

Developers have long seen the benefits of having a powerful simulation environment for testing and training robots. But all too often, the simulators have had shortcomings that limited their adoption. Isaac Sim addresses these drawbacks, Andrews said.

Realistic simulation

I was looking at the images of Isaac robots in the press material, and I thought they were photographs. But these are 3D-animated images of robots in the Omniverse.

To deliver realistic robotics simulations, Isaac Sim leverages the Omniverse platform’s powerful technologies, including advanced graphics processing unit (GPU)-enabled physics simulation with PhysX 5, photorealism with real-time ray and path tracing, and Material Definition Language (MDL) support for physically based rendering.

Isaac Sim is built to address many of the most common robotics use cases, including manipulation, autonomous navigation, and synthetic data generation for training. Its modular design allows users to easily customize and extend the toolset to accommodate many applications and environments.

“This image is a digital twin of BMW’s new factory that their factory planners worked on. They brought it into the Omniverse world. And the cool thing about being in Omniverse is that I can put my simulated robot right in this world, and collect the training data that I’m going to use for my AI models, do my testing, do all sorts of different scenarios. And that’s kind of one of the beauties of being a part of the Omniverse platform,” Andrews said. “I’ve been challenged to come up with a catchy phrase, and I never really come up with a catchy phrase, but it’s something around the realistic robot models and the complex scenes that they’re going to operate in.”

To me, it is kind of like designing products inside one of Pixar’s film worlds, only one that is far more realistic.

With Omniverse, Isaac Sim benefits from Omniverse Nucleus and Omniverse Connectors, enabling the collaborative building, sharing, and importing of environments and robot models in Pixar’s Universal Scene Description (USD) standard. Engineers can easily connect the robot’s brain to a virtual world through the Isaac SDK and ROS/ROS2 interface, fully featured Python scripting, and plugins for importing robot and environment models.

Synthetic data generation is an important tool that is increasingly used to train the perception models found in today’s robots. Getting real-world, properly labeled data is a time-consuming and expensive endeavor. And in the case of robotics, some of the required training data could be too difficult or dangerous to collect in the real world. This is especially true of robots that must operate in close proximity to humans.

Isaac Sim has built-in support for a variety of sensor types that are important in training perception models. These sensors include RGB, depth, bounding boxes, and segmentation, Andrews said.

How realistic should it be?

“You just want to, within reason, close that sim-to-real gap,” Andrews said. “If you have a small error, that can accumulate in your simulation. It can pick up over time, like an error in physics modeling where you don’t do something right with how the wheels [function], then the first time you simulate it, your robot may be fine. But that error builds up and the robot may find itself completely off course in the real world.”

He added, “The closer you can get into the reality, there’s just a better experience you’re going to have when the engineers try to use it. In the world of simulation, you always face this idea of now that I have the real hardware, what’s the value of still using the simulator.”
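The error buildup Andrews describes can be illustrated with a small sketch. It is not Isaac Sim code. It assumes a hypothetical two-wheeled (differential-drive) robot and compares a reference model with one whose right wheel radius is mismodeled by 1%. The function names, wheel sizes, speeds, and time step are all made-up values for illustration.

```python
import math


def drive(left_radius, right_radius, wheel_speed=5.0,
          track_width=0.5, dt=0.01, steps=1000):
    """Integrate a differential-drive robot with simple Euler steps.

    All parameters are hypothetical: radii and track width in meters,
    wheel_speed in radians per second, dt in seconds.
    Returns the list of (x, y) positions after each step.
    """
    x = y = heading = 0.0
    path = []
    for _ in range(steps):
        v_left = wheel_speed * left_radius
        v_right = wheel_speed * right_radius
        v = (v_left + v_right) / 2.0              # forward speed
        omega = (v_right - v_left) / track_width  # turn rate
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        heading += omega * dt
        path.append((x, y))
    return path


def position_gap(path_a, path_b):
    """Distance between two paths at each time step."""
    return [math.hypot(ax - bx, ay - by)
            for (ax, ay), (bx, by) in zip(path_a, path_b)]


if __name__ == "__main__":
    reference = drive(0.100, 0.100)   # both wheels modeled correctly
    mismodeled = drive(0.100, 0.101)  # right wheel radius off by 1%
    gap = position_gap(reference, mismodeled)
    for step in (0, 9, 99, 999):
        print(f"step {step + 1:4d}: gap = {gap[step]:.5f} m")
```

In this toy setup the two models are nearly indistinguishable after the first step, but the gap keeps growing because the small radius error also bends the heading, which is the kind of drift that pushes a robot off course.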

Getting better data

In the open beta, Isaac Sim can output synthetic data in the KITTI format. This data can then be used directly with the Nvidia Transfer Learning Toolkit to improve model performance with use case-specific data, Andrews said.
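For readers unfamiliar with KITTI, its object-detection labels are plain text files with one object per line and 15 space-separated fields: class name, truncation, occlusion, observation angle, the 2D bounding box (left, top, right, bottom), 3D dimensions, 3D location, and rotation. The sketch below writes such a line for a 2D box. The helper name `kitti_label_line` is hypothetical, and zero-filling the 3D fields is an assumption for 2D-only use. The article does not say which values Isaac Sim actually writes.

```python
def kitti_label_line(cls, left, top, right, bottom,
                     truncated=0.0, occluded=0):
    """Format one object as a KITTI-style label line (15 fields).

    Hypothetical helper for illustration. The 3D fields are
    zero-filled, which is an assumption for 2D-only detection data.
    """
    if " " in cls:
        raise ValueError("class name must not contain spaces")
    fields = [
        cls,                      # object class
        f"{truncated:.2f}",       # truncation, 0.0 to 1.0
        str(occluded),            # occlusion state
        "0.00",                   # alpha (observation angle)
        f"{left:.2f}", f"{top:.2f}",
        f"{right:.2f}", f"{bottom:.2f}",  # 2D box in pixels
        "0.00", "0.00", "0.00",   # 3D dimensions: height, width, length
        "0.00", "0.00", "0.00",   # 3D location: x, y, z
        "0.00",                   # rotation_y
    ]
    return " ".join(fields)


if __name__ == "__main__":
    print(kitti_label_line("robot", 10, 20, 110.5, 220))
```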

Domain randomization varies the parameters that define a simulated scene, such as the lighting, color, and texture of components in the scene. One of the main objectives of domain randomization is to improve the training of machine learning (ML) models by exposing the neural network to a wide variety of domain parameters in simulation. This helps the model generalize well when it encounters real-world scenarios. In effect, this technique helps teach models what to ignore.

Isaac Sim supports the randomization of many different attributes that help define a given scene. With these capabilities, ML engineers can ensure that the synthetic dataset contains enough diversity to drive robust model performance.
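As a rough illustration of the idea, and not of Isaac Sim’s own interface, the sketch below draws random lighting, color, and texture values for each scene in a synthetic dataset. The class `SceneParams`, the functions, the texture names, and the value ranges are all hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical texture names, purely for illustration.
TEXTURES = ["concrete", "brushed_metal", "wood", "plastic"]


@dataclass(frozen=True)
class SceneParams:
    light_intensity: float  # arbitrary units, assumed range 200 to 2000
    color_rgb: tuple        # each channel in [0, 1)
    texture: str


def randomize_scene(rng):
    """Draw one random set of scene parameters."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 2000.0),
        color_rgb=tuple(rng.random() for _ in range(3)),
        texture=rng.choice(TEXTURES),
    )


def generate_dataset(n_scenes, seed=0):
    """Return n_scenes randomized parameter sets, reproducible by seed."""
    rng = random.Random(seed)
    return [randomize_scene(rng) for _ in range(n_scenes)]


if __name__ == "__main__":
    for params in generate_dataset(3):
        print(params)
```

Each parameter set would then drive one rendered image. Because the object of interest stays the same while lighting and surfaces change, a model trained on the result has less reason to rely on those incidental cues.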

Simulations can save time and other things

In real life, 50 engineers might be working on a project, but they may have only one hardware prototype. With something like Isaac, all 50 software engineers could work on it at the same time, Andrews said. The engineers also no longer have to be in the same physical space, as they can work on parts of it remotely.

“I was designing processor cores and people always wanted to simulate it before they had the real hardware, but when their chip came back, the simulator was put on the side,” Andrews said. “In the robotics use case, I still feel like there’s value for the simulator, even when you have hardware because the robots themselves are expensive.”

On top of that, it could be dangerous to test a robot in the real world if its controls are not right. It may run into a human. But if you test it in the Omniverse, the simulation will not hurt anyone.

Over time, Nvidia has added things like multi-camera support, a fisheye camera lens, and other sensors that enhance the functions of the robot and its ability to sense the environment. The more elements are improved in the real world, the more the Isaac simulation can be updated in the Omniverse, Andrews said.