
Roughly a year ago, Facebook detailed its work on an AI chatbot named BlenderBot 1.0, which the company claims is the largest-ever project of its kind. In an extension of that work, Facebook today took the wraps off BlenderBot 2.0, which it says is the first chatbot that can build long-term memory while searching the internet for up-to-date information.

Language systems like OpenAI’s GPT-3 and BlenderBot 1.0 can express themselves articulately, at least in the context of an ongoing conversation. But as Facebook notes, they suffer from very short short-term memory and long-term memory limited to what they have previously been taught. If you tell GPT-3 or BlenderBot 1.0 something today, they will have forgotten it by tomorrow. And they will confidently state information that is not correct, owing to deficiencies in their algorithms. Because they cannot gain additional knowledge, GPT-3 and BlenderBot 1.0 believe that NFL superstar Tom Brady is still on the New England Patriots, for example, and do not know that he won the 2021 Super Bowl with the Tampa Bay Buccaneers.

By contrast, BlenderBot 2.0 can query the internet using any search engine for movies, TV shows, and more, and can both read from and write to its long-term local memory store. It also remembers the context of previous discussions, a form of continual learning. So, for example, if you talked about Tom Brady with it weeks ago, it could potentially bring up the NFL in future conversations, as it knows that is a relevant topic to you.

“This work combines and refines a number of ideas around retrieval and memory in transformer models and puts them together in a clever way,” Connor Leahy, one of the founding members of EleutherAI, told VentureBeat via email. “The results look impressive, especially when one considers how small these models are compared to the likes of GPT-3. I especially applaud the team for addressing shortcomings and potential problems with their method, and for releasing their code and data publicly, which will help the wider research community to scrutinize and further improve these methods.”

Conversational capability

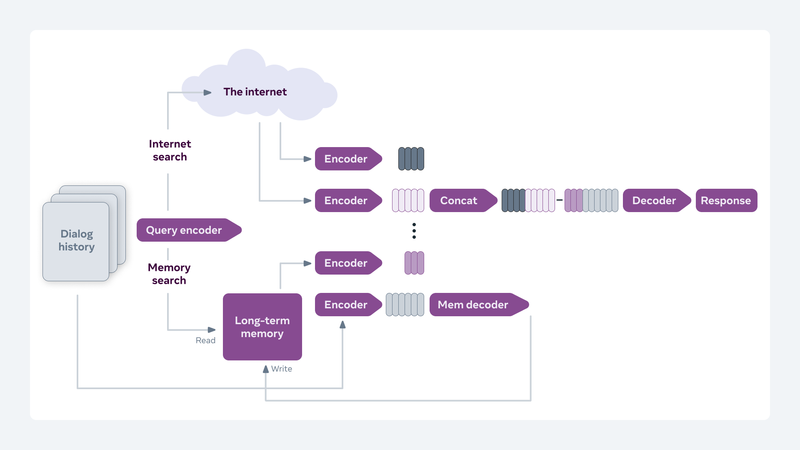

BlenderBot 2.0 uses an AI model based on retrieval augmented generation, an approach that enables generating responses and incorporating knowledge beyond that contained in a conversation. During a dialogue, the model, which combines an information retrieval component with a text generator, seeks relevant information both in its long-term memory and from documents it finds by searching the internet.

A neural network module in BlenderBot 2.0 generates search queries given a conversational context. The chatbot then prepends the retrieved knowledge to the conversational history and takes that knowledge into account in deciding what to write. Effectively, BlenderBot 2.0 reads from its long-term memory store while writing, a process achieved by using a module that generates the memory to be stored based on conversational content.
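The flow described above (generate a query, retrieve from memory and from search, prepend the results to the history, then write a new memory) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not Facebook's implementation: the class `ToyRetrievalBot`, its method names, and the `tokens` helper are hypothetical, keyword overlap over a local list stands in for a web search engine, and simple rules stand in for the trained neural modules.

```python
from __future__ import annotations

from dataclasses import dataclass, field


def tokens(text: str) -> set[str]:
    """Lowercase a string and split it into punctuation-free words."""
    stripped = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in stripped if w}


@dataclass
class ToyRetrievalBot:
    """Hypothetical stand-in for the retrieve-then-generate flow."""

    memory: list[str] = field(default_factory=list)  # long-term memory store

    def generate_query(self, history: list[str]) -> str:
        # Assumption: reuse the latest turn as the query. The article
        # describes a neural network module doing this step.
        return history[-1]

    def search(self, query: str, corpus: list[str]) -> list[str]:
        # Assumption: keyword overlap over a local corpus replaces a
        # real search engine.
        return [doc for doc in corpus if tokens(query) & tokens(doc)]

    def recall(self, query: str) -> list[str]:
        # Read from long-term memory using the same overlap rule.
        return [note for note in self.memory if tokens(query) & tokens(note)]

    def build_context(self, history: list[str], corpus: list[str]) -> list[str]:
        query = self.generate_query(history)
        retrieved = self.recall(query) + self.search(query, corpus)
        # Prepend the retrieved knowledge to the conversational history;
        # a text generator would condition on this combined context.
        return retrieved + history

    def write_memory(self, history: list[str]) -> None:
        # Assumption: store the latest turn verbatim. The article describes
        # a module that generates the memory to be stored.
        note = history[-1]
        if note not in self.memory:
            self.memory.append(note)
```

In this sketch, calling `build_context` in a first session returns matching documents followed by the history. After `write_memory` is called, a later session whose query shares a word with the stored note gets that note prepended as well, which mirrors the article's Tom Brady example in miniature.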

To train BlenderBot 2.0’s neural networks, Facebook collected data in English using a crowdsourcing platform akin to Amazon Mechanical Turk. One of the resulting datasets, Wizard of the Internet, contains human conversations augmented with new information from internet searches, via the Microsoft Bing API. The other, called Multisession, has long-context chats with humans referencing knowledge from previous conversation sessions.

Wizard of the Internet provides guidance to BlenderBot 2.0 on how to generate relevant search engine queries, as well as create responses based on the search results. Meanwhile, Multisession helps the chatbot decide which fresh knowledge to store in long-term memory and what to write given those memories. In tandem with the Blended Skill Talk dataset, which Facebook created to give BlenderBot 1.0 knowledge and “personality,” Facebook says that Wizard of the Internet and Multisession enable BlenderBot 2.0 to exhibit a range of conversational skills simultaneously.

Safety and future steps

Even the best language models today exhibit bias and toxicity; it is well-established that they amplify the gender, race, and religious biases in the data on which they were trained. OpenAI itself notes that biased datasets can lead to placing words like “naughty” or “sucked” near female pronouns and “Islam” near words like “terrorism.” A separate paper by Stanford University Ph.D. candidate and Gradio founder Abubakar Abid details the biased tendencies of text generated by GPT-3, like associating the word “Jews” with “money.” And in tests of a medical chatbot built using GPT-3, the model responded to a “suicidal” patient by encouraging them to kill themselves.

In an effort to mitigate this, Facebook says that it implemented “safety recipes” in BlenderBot 2.0 to reduce offensive responses. As measured by an automated classifier, the chatbot was 90% less likely to respond harmfully and 74.5% more likely to give a “safe” response to questions from real people. Facebook also says that its methods alleviate the risk of BlenderBot 2.0 spouting harmful falsehoods “to some extent,” at least compared with previous methods.

“We know that safety issues are not yet solved, and BlenderBot 2.0’s approach of utilizing the internet and long-term memory to ground conversational responses brings new safety challenges,” Facebook research scientist Jason Weston and research engineer Kurt Shuster wrote in a blog post. “As a research community, we need to address them, and we believe reproducible research on safety, made possible by releases like this, will help the community make important new progress in this area together.”

In experiments, Facebook says that BlenderBot 2.0 outperformed BlenderBot 1.0 when it came to picking up where previous conversation sessions left off, with a 17% improvement in “engagingness” (as scored by human evaluators) and a 55% improvement in the use of previous conversation sessions. Furthermore, BlenderBot 2.0 reduced hallucinations from 9.1% to 3.0% and was factually consistent across a conversation 12% more often.

To spur further research in these directions, Facebook has open-sourced BlenderBot 2.0 and the datasets used to train it, Wizard of the Internet and Multisession. “We think that these improvements in chatbots can advance the state of the art in applications such as virtual assistants and digital friends,” Weston and Shuster wrote. “Until models have deeper understanding, they will sometimes contradict themselves. Similarly, our models cannot yet fully understand what is safe or not. And while they build long-term memory, they don’t truly learn from it, meaning they don’t improve on their mistakes … We look forward to a day soon when agents built to communicate and understand as humans do can see as well as talk.”
